Duration: 1 Week · Project Type: Individual

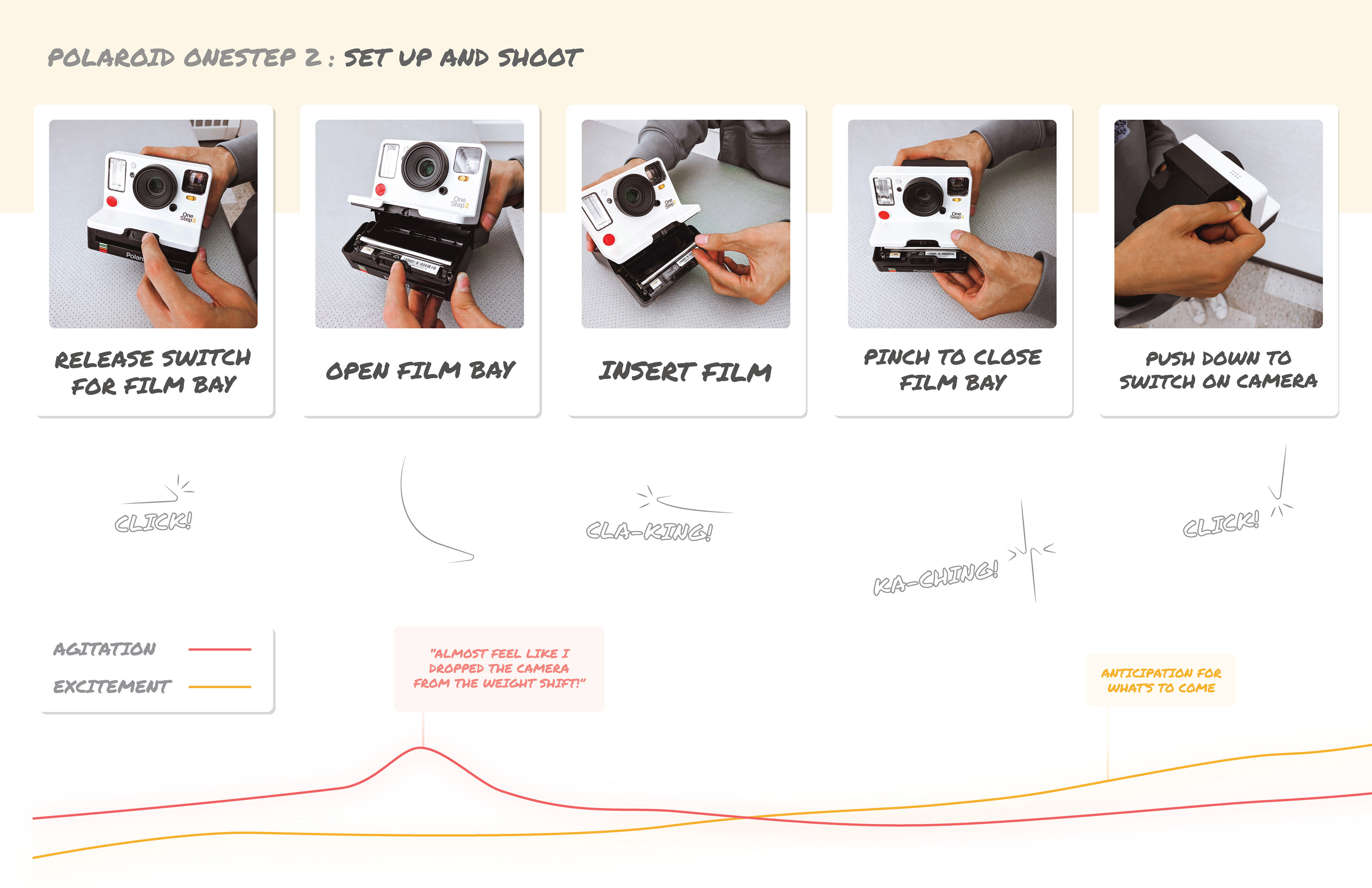

Mapping the user's experience of using the camera gave me more insight into gestures that could be reused in parts of the app. I borrowed them both as a form of homage and to make the digital interaction feel more familiar.

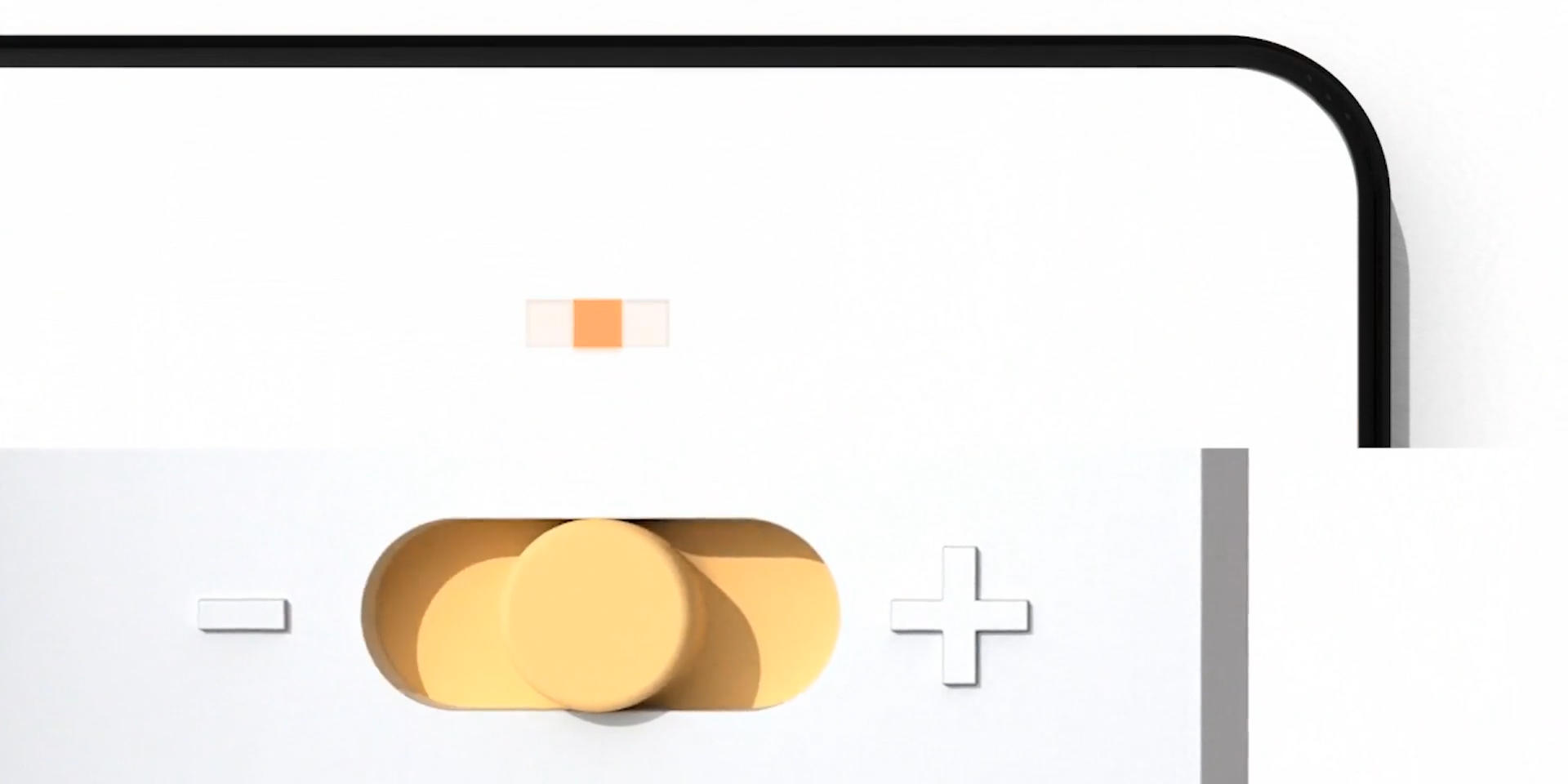

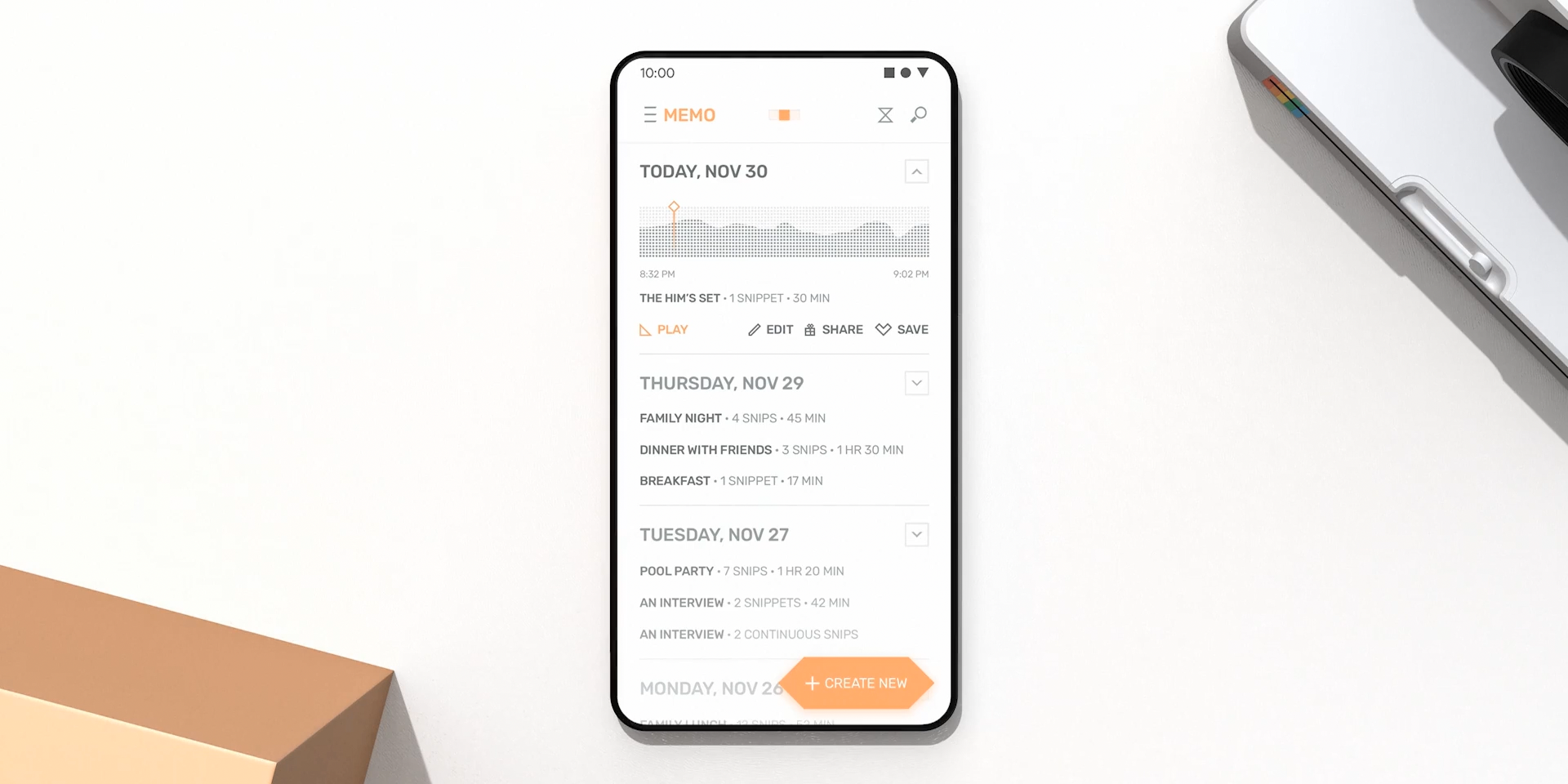

Memo has two modes, manual and automatic, like cameras today.

Manual mode is a freeform way of capturing memories that gives the user unlimited recording time.

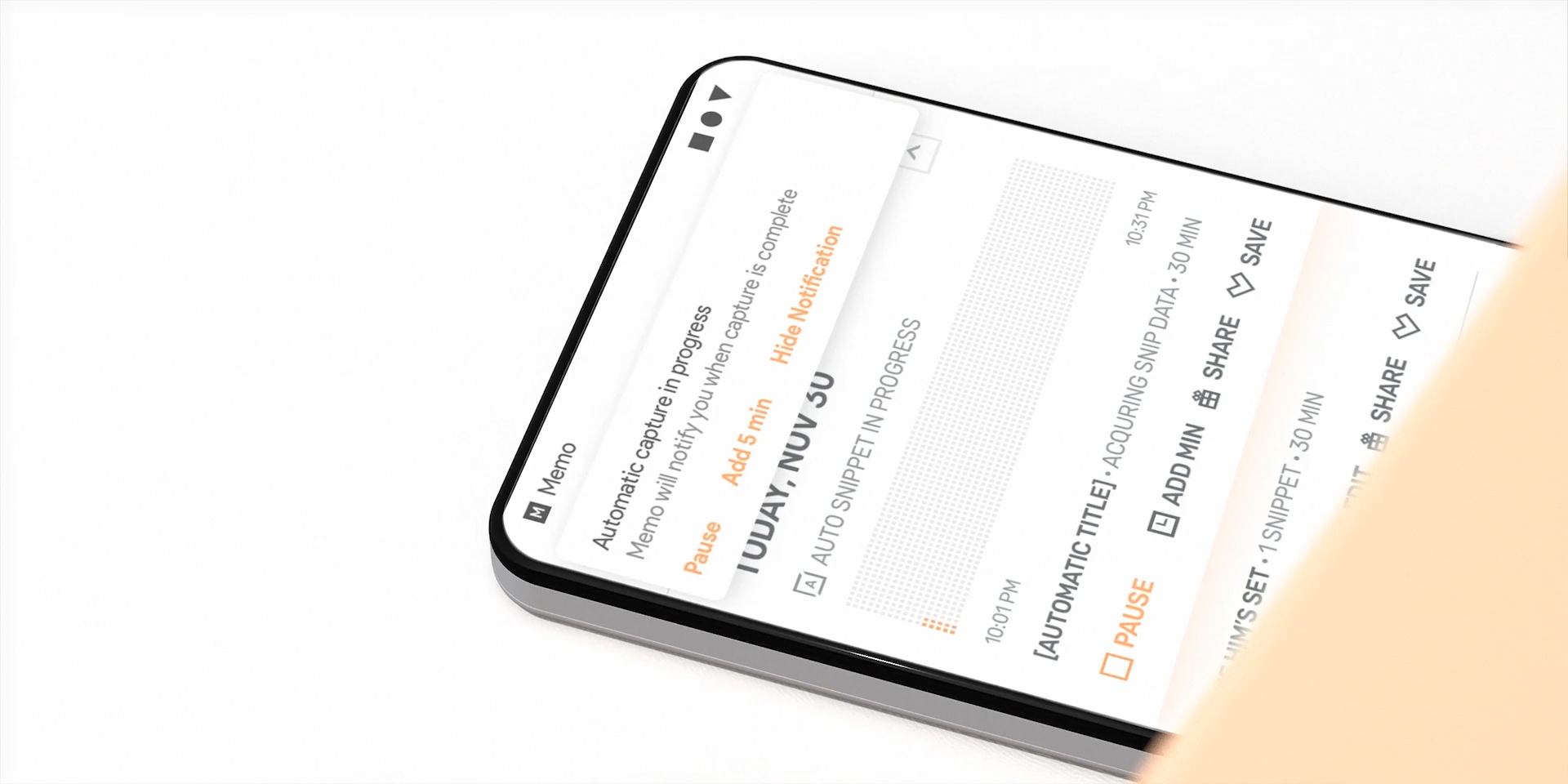

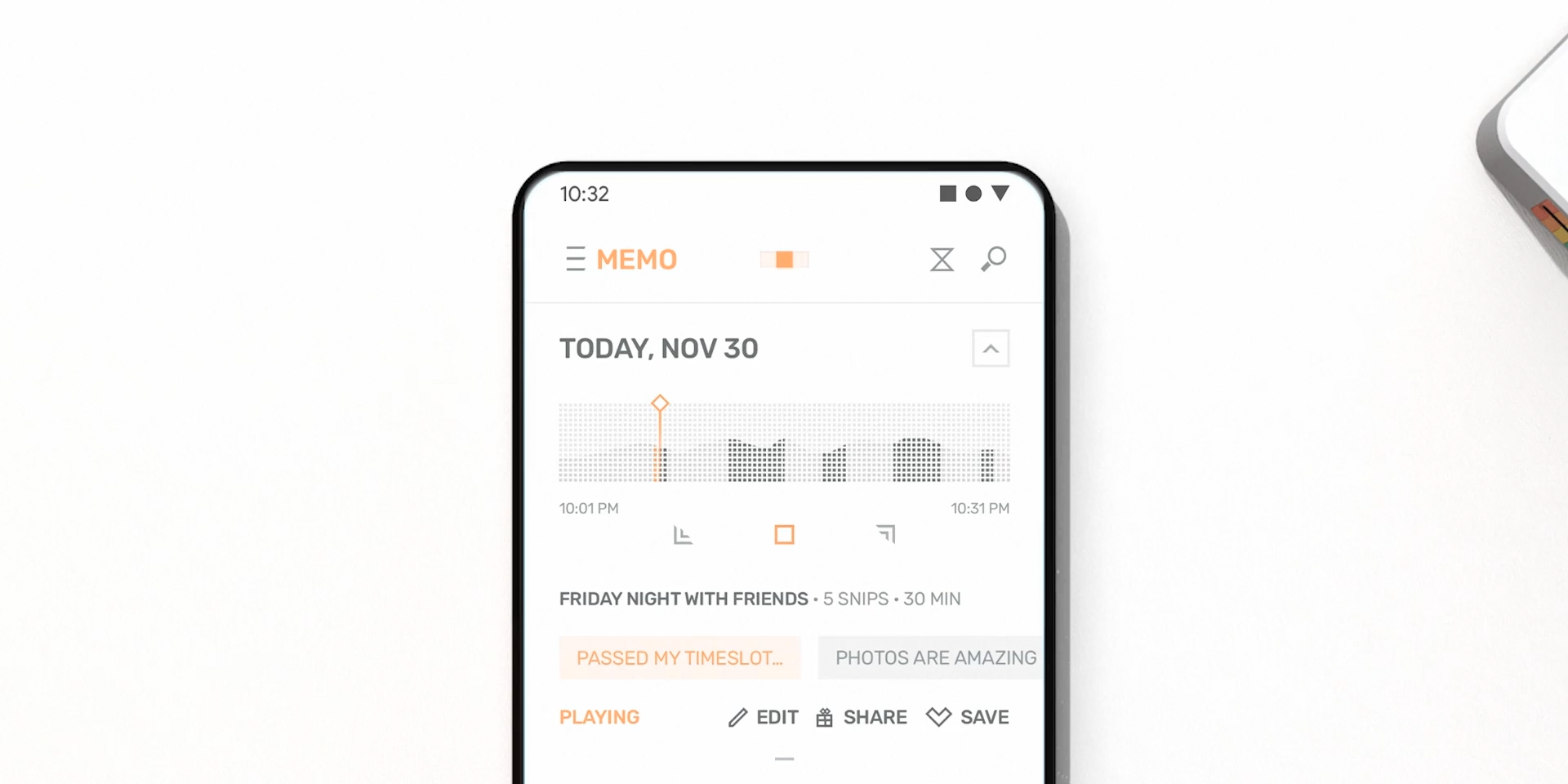

Automatic mode uses on-device artificial intelligence and machine learning to capture snippets of moments. Snippets are divided into smaller snips, and the user can view transcripts of things like conversations and listen back to them.

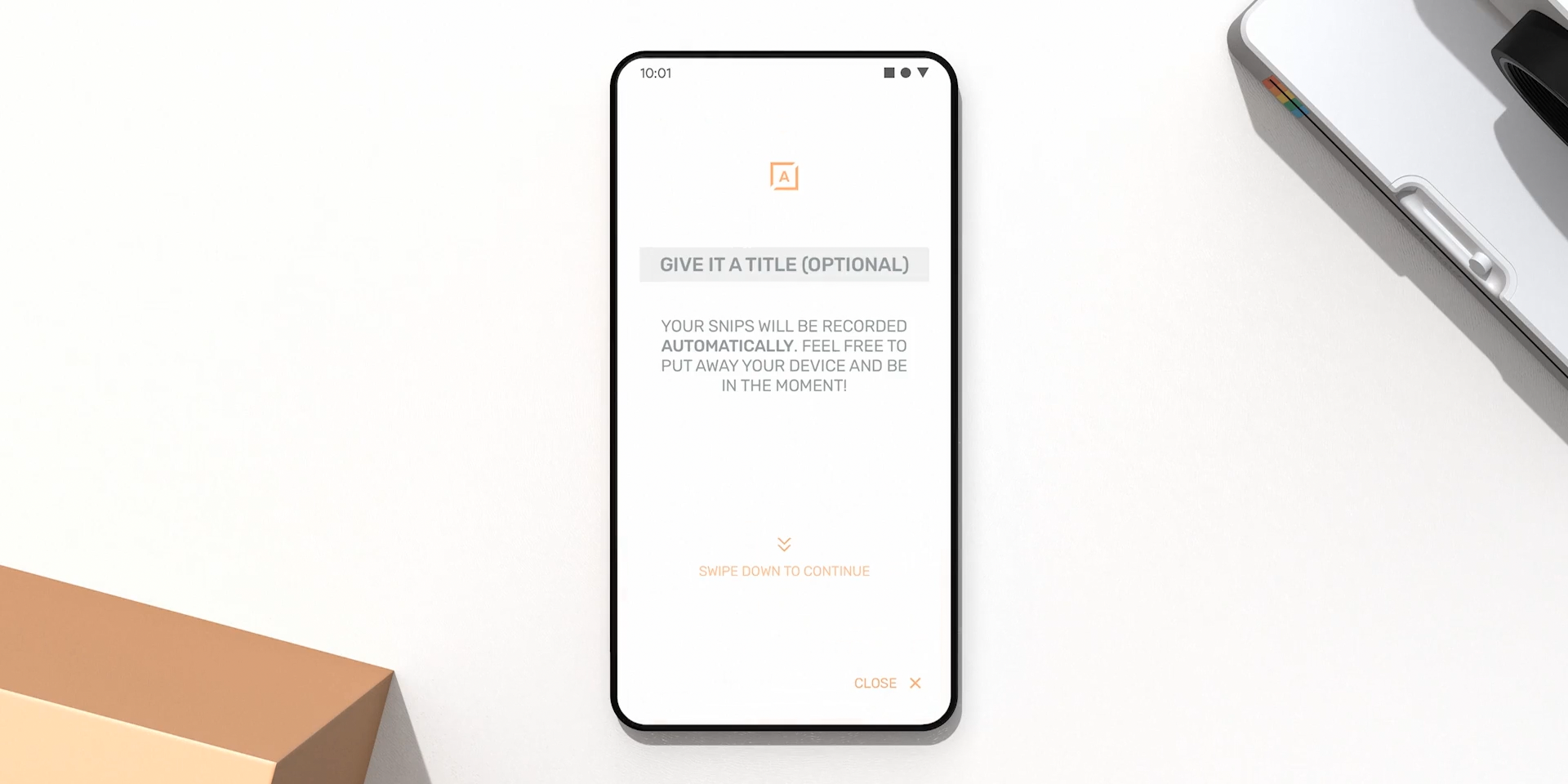

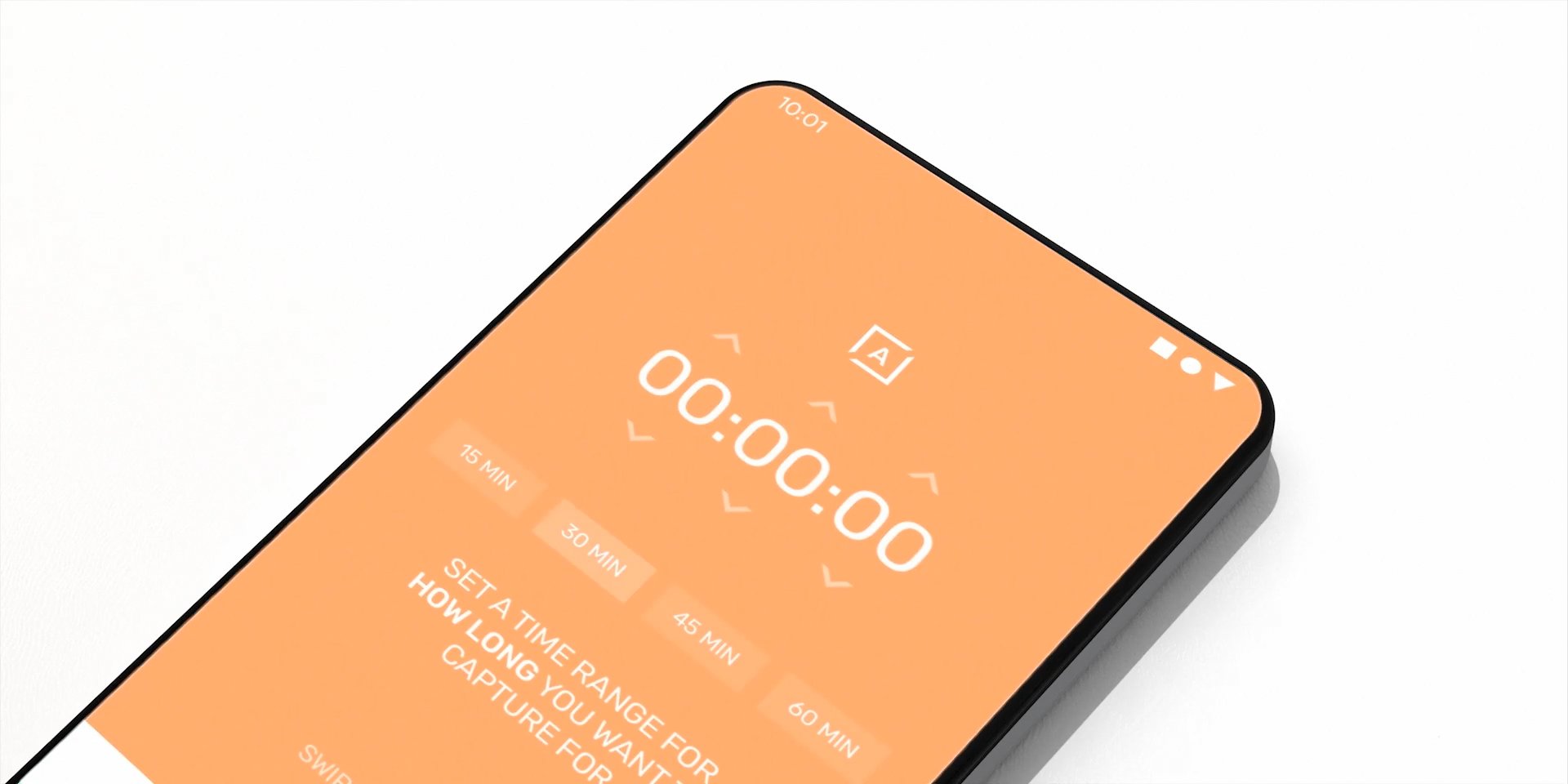

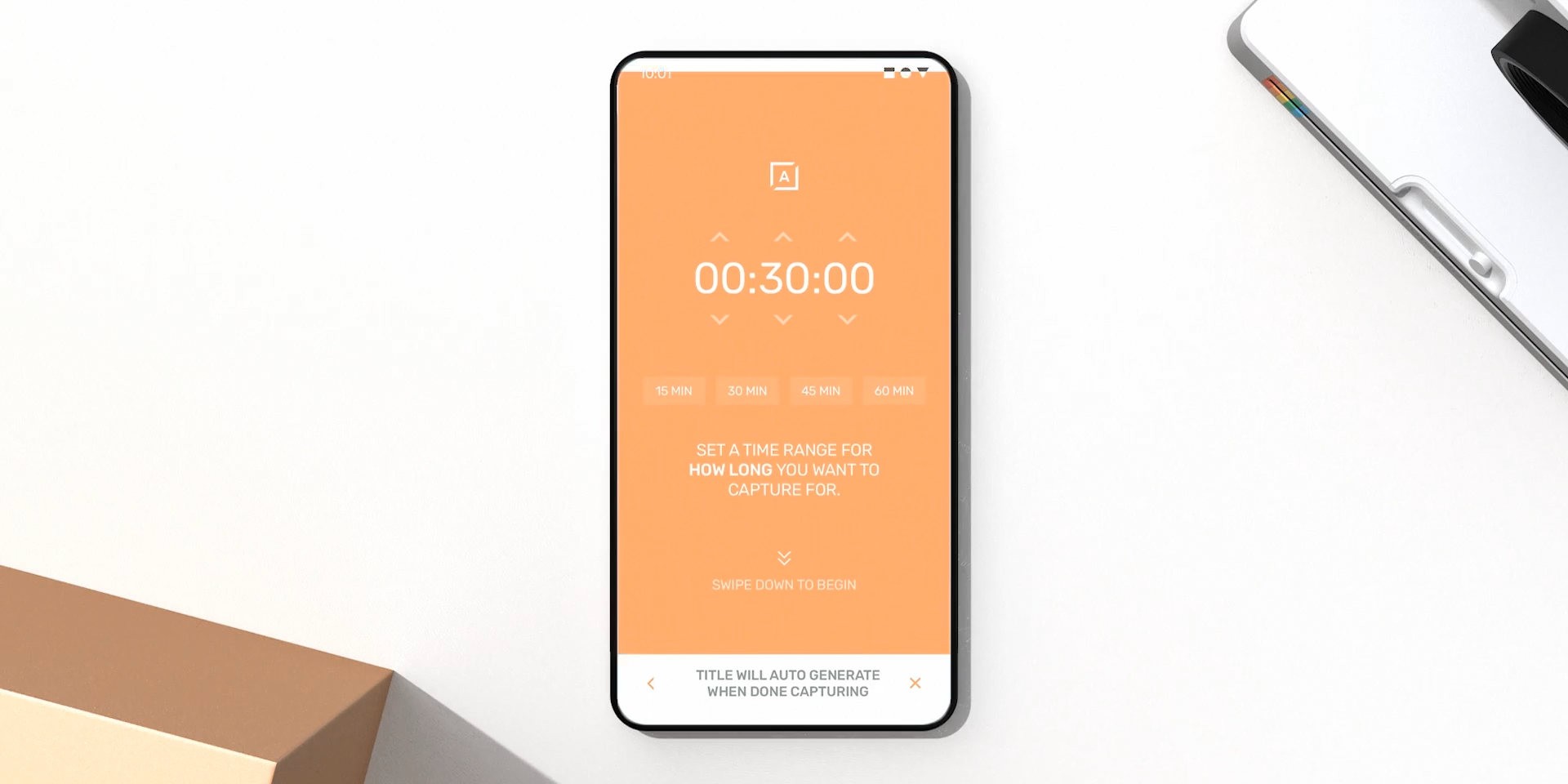

There is an element of surprise in auto mode: the user just sets a time frame at the beginning and has no idea what the end result will be.

This is a brief demonstration of the interface, walking through automatic mode.

The demonstration was created with a combination of UI prototyping, animation, CGI, and live footage, with post-process compositing where necessary.

The phone seen throughout the video was designed specifically for this project and does not physically exist, so a prototype dummy was used for motion tracking and for compositing the device into the live-action footage.